Apache NiFi and Progress MarkLogic

- 10 April, 2024

- By Dave Cassel

For years, I've used Apache NiFi as a data orchestration tool. Using NiFi's built-in scheduler, we pull data from upstream sources, send it to Progress MarkLogic, and trigger MarkLogic to take certain actions on that data. We also use NiFi to ask MarkLogic what information is ready to process, and then act on it. This post looks at best practices for that communication.

The 4V Nexus search application has a few examples of this. 4V Nexus lets content administrators add content sources (SharePoint, Confluence, Google Drive, Windows File Shares, etc.), specify how often each should be ingested, and choose what credentials to use when connecting. Sometimes a content admin may want to remove a content source. Removing the source from the list tracked in MarkLogic is simple, but if we have brought in a large number of documents from that source, we may not be able to remove them all in a single transaction. Apache NiFi helps here: it asks MarkLogic for a list of affected documents, processes them, then reports back to MarkLogic when all content has been cleaned up. At that last stage, we tell MarkLogic to remove the source from the list.
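The ordering in that cleanup flow matters, so here is a minimal sketch of it in plain JavaScript. It is illustrative only: `toBatches` and `cleanUpSource` are hypothetical names, and the `deleteBatch` and `removeSource` callbacks are assumed stand-ins for the calls NiFi would make to MarkLogic. The point it shows is that documents are deleted in transaction-sized batches, and the source is removed from the tracked list only after every batch is done.

```javascript
// Hypothetical helper: split a content source's document URIs into
// fixed-size batches so each batch can be deleted in its own transaction.
function toBatches(uris, batchSize) {
  if (!Number.isInteger(batchSize) || batchSize < 1) {
    throw new RangeError('batchSize must be a positive integer');
  }
  const batches = [];
  for (let i = 0; i < uris.length; i += batchSize) {
    batches.push(uris.slice(i, i + batchSize));
  }
  return batches;
}

// Hypothetical orchestration: deleteBatch and removeSource stand in for
// NiFi-to-MarkLogic calls. The source is removed only after all batches
// have been processed. Returns the number of batches deleted.
function cleanUpSource(sourceId, uris, batchSize, deleteBatch, removeSource) {
  const batches = toBatches(uris, batchSize);
  for (const batch of batches) {
    deleteBatch(batch);
  }
  removeSource(sourceId);
  return batches.length;
}
```

With five URIs and a batch size of 2, `toBatches` yields three batches of sizes 2, 2, and 1. In a NiFi flow, each batch could travel as its own flow file.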

That process has several points where NiFi talks to MarkLogic, and there are multiple ways to handle each one. Let's look at some of the tradeoffs.

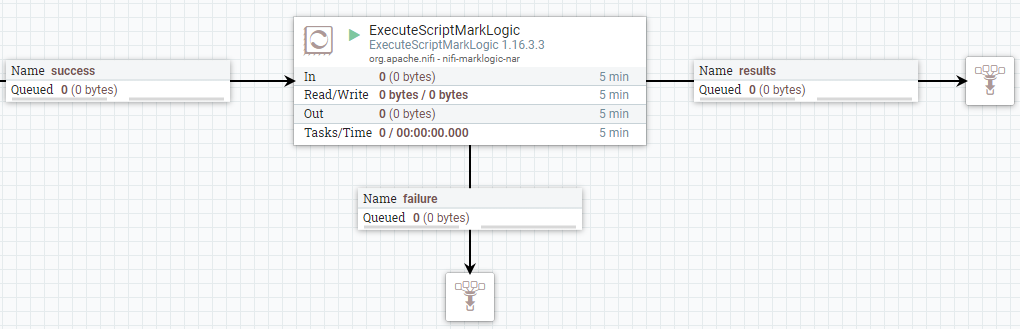

ExecuteScriptMarkLogic

The simplest way for NiFi to talk to MarkLogic is the ExecuteScriptMarkLogic processor. With this approach, we put code into the processor itself, and MarkLogic runs it as an eval. This makes testing easy: if you don't quite get the result you were hoping for, change the code and send another flow file through. The result is rapid iteration, similar to working in Query Console.

This works well for exploratory coding, but it has downsides for code that needs to last in your application.

The code itself lives in NiFi. You'll likely commit it to the NiFi Registry, but it is disconnected from the rest of your application's code, which is likely in git. More significantly, this code is hard to test: the only way to test it is to run flow files through the processor with each input you'd like to try.

My preferred approach when using ExecuteScriptMarkLogic is to move the code into a library module deployed to MarkLogic. The code that remains in NiFi then looks something like this:

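```javascript
// Load the library module deployed to MarkLogic
const myLib = require('/lib/myLib.sjs');

// #{someParam} is a NiFi parameter; ${flowFileAttribute} is NiFi Expression Language
myLib.callMyFunction('#{someParam}', '${flowFileAttribute}');
```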

The processor's job is simply to convert what NiFi knows into parameters to the function. (This approach is good for APIs, too.)

Now that the code is in a library, I can make these same calls in Query Console. Even better, I can write unit tests that set up a variety of scenarios and prevent regressions when making changes.

ExecuteScriptMarkLogic also offers a module path option, which likewise relies on code that has been deployed to MarkLogic. I like it better, but it still raises the security concern discussed below.

CallRestExtensionMarkLogic

Convenient as it is, ExecuteScriptMarkLogic isn't the only way to call MarkLogic. The CallRestExtensionMarkLogic processor lets you call a MarkLogic REST API extension. At some level, this is similar to the code above that imports a library and calls a function, but there is one big difference to be conscious of: privileges. With this approach, the user that NiFi uses to talk to MarkLogic needs REST-related privileges, such as http://marklogic.com/xdmp/privileges/rest-reader and http://marklogic.com/xdmp/privileges/rest-writer. ExecuteScriptMarkLogic, on the other hand, uses the /v1/eval endpoint, which requires privileges like http://marklogic.com/xdmp/privileges/xdmp-eval. Those privileges are broad in scope, since they allow the user to evaluate arbitrary code.
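For comparison, a MarkLogic REST API resource extension written in server-side JavaScript exports functions named after the HTTP methods it supports. The sketch below assumes a hypothetical extension installed as `source-cleanup` and a hypothetical `markSourceCleaned` helper. In a real project that helper would come from a library module such as `/lib/myLib.sjs`; it is inlined here only to keep the example self-contained. A request parameter sent as `rs:sourceId` reaches the function as `params.sourceId`.

```javascript
// Hypothetical helper; in a real project this would be imported, e.g.
// const myLib = require('/lib/myLib.sjs');
function markSourceCleaned(sourceId) {
  return { sourceId: sourceId, status: 'removed' };
}

// Handles POST /v1/resources/source-cleanup?rs:sourceId=...
// MarkLogic passes extension parameters without the "rs:" prefix;
// input holds the request body, which this sketch does not use.
function post(context, params, input) {
  const sourceId = params.sourceId;
  if (!sourceId) {
    // An uncaught error makes the request fail.
    throw new Error('Missing required parameter rs:sourceId');
  }
  context.outputTypes = ['application/json'];
  return markSourceCleaned(String(sourceId));
}

exports.POST = post;
```

The extension body stays a thin wrapper around library code, just like the ExecuteScriptMarkLogic example, but NiFi's user only needs the REST privileges listed above rather than xdmp-eval.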

Note that you should use CallRestExtensionMarkLogic, not ExtensionCallMarkLogic. The latter has been deprecated in favor of CallRestExtensionMarkLogic, which brings several improvements, the most significant being much better error handling.

Conclusion

Both of these processors have their place. The privileges ExecuteScriptMarkLogic needs are typically locked down to a role intended for NiFi's use, but weigh that tradeoff for your own application. Whichever you choose, make sure the bulk of your code is properly version controlled and, most importantly, well tested!

4V Services works with development teams to boost their knowledge and capabilities. Contact us today to talk about how we can help you succeed!

For years, I've used Apache NiFi as a data orchestration tool. Based on NiFi's built-in scheduler, we pull data from...