String interpolation in Apache NiFi

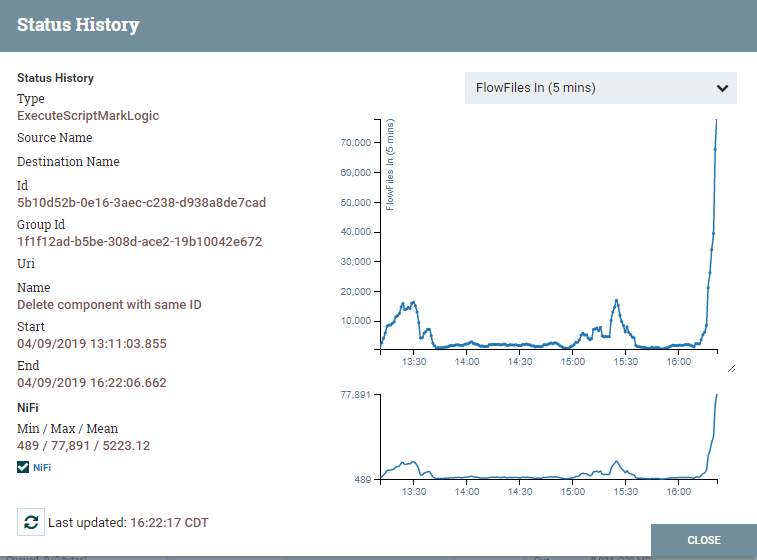

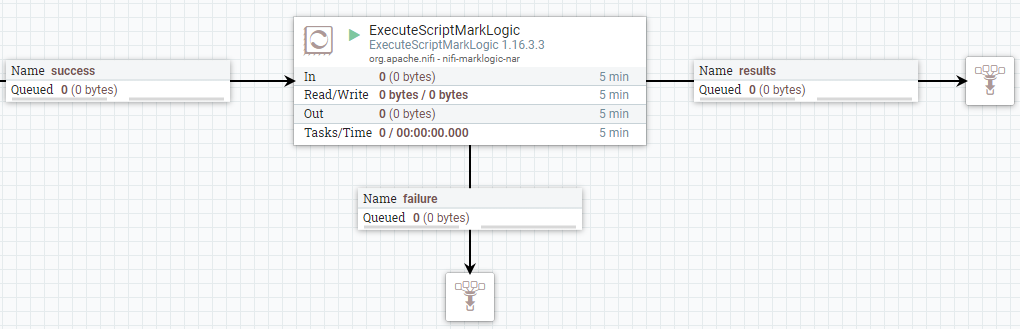

In one of my recent posts, I talked about ExecuteScriptMarkLogic, a handy processor for getting Apache NiFi to talk to Progress MarkLogic. I’d like to share a couple of little “gotchas” that we’ve run into. I’m using ExecuteScriptMarkLogic to illustrate the point, but it applies to any processor that runs JavaScript code we supply.

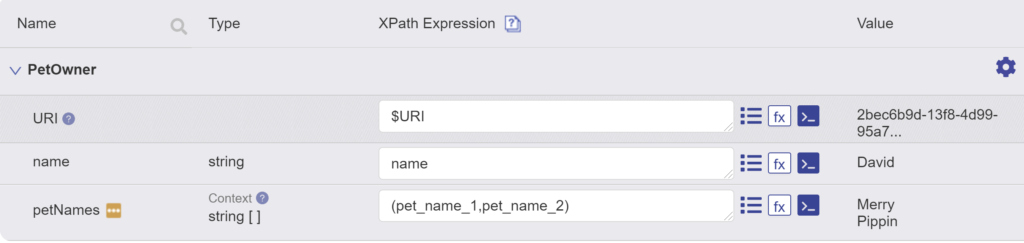

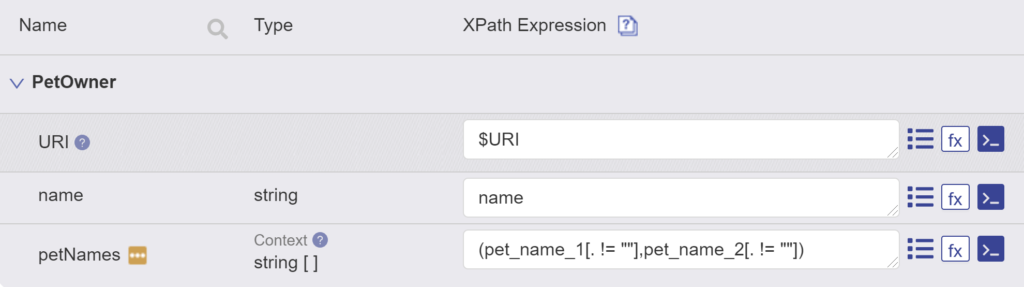

In NiFi’s expression language, we use the following syntax to refer to a flowfile attribute value: ${myAttribute}. This lets us use attribute values in the code we run in MarkLogic. Here’s an example:

'use strict';

const myLib = require('/lib/myLib.sjs');

myLib.myFunction('${myAttribute}');

There are a couple things to note here.

Direct Replacement

With the code above, ${myAttribute} will be replaced by whatever value is found in the corresponding attribute of the flowfile, if any. Note that I surrounded the reference with quotation marks. It’s easy to think of attribute values as strings, but the value simply replaces the reference without adding anything. If the attribute value is “foo” and I left the quotes out of my code, I’d end up with myLib.myFunction(foo). JavaScript would read foo as a variable name rather than a string, so the call fails unless a variable with that name happens to be defined. The quotes ensure that the value is presented as a string. If there is no value, we’ll get an empty string.
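To make the direct replacement concrete, here is a minimal sketch that imitates it in plain JavaScript. The substituteAttributes function is a hypothetical stand-in written for this illustration; it is not NiFi's implementation and only handles simple ${name} references.

```javascript
// Hypothetical stand-in for NiFi's attribute substitution (an assumption for
// illustration only; the real expression language does far more than this).
function substituteAttributes(scriptText, attributes) {
  // Replace each ${name} with the attribute value, or with nothing if it is missing.
  return scriptText.replace(/\$\{(\w+)\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(attributes, name)
      ? String(attributes[name])
      : ''
  );
}

// The script text as it would be typed into the processor.
const quotedScript = "myLib.myFunction('${myAttribute}');";
const unquotedScript = 'myLib.myFunction(${myAttribute});';

const quotedWithValue = substituteAttributes(quotedScript, { myAttribute: 'foo' });
const unquotedWithValue = substituteAttributes(unquotedScript, { myAttribute: 'foo' });
const quotedMissing = substituteAttributes(quotedScript, {});

console.log(quotedWithValue);   // myLib.myFunction('foo');
console.log(unquotedWithValue); // myLib.myFunction(foo);
console.log(quotedMissing);     // myLib.myFunction('');
```

Only the quoted form yields a string literal in every case, which is why the quotes belong in the script rather than in the attribute value.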

Of course, it might be that I want a number, not a string. Does that mean I can just leave off the quotes and be done? I could, but I’d be taking a chance. If there’s no value, the reference isn’t replaced with a zero; it’s replaced with nothing at all. Consider myFunction(${myAttribute}). If myAttribute has no value, this code will be evaluated as myFunction(). That may not be what you want. Worst case, your code might return a wrong answer instead of failing. If a missing or non-numeric value is something you want to handle, you can do this:

'use strict';

const myLib = require('/lib/myLib.sjs');

const myValue = parseInt('${myAttribute}', 10);

if (!isNaN(myValue)) {

  // do the processing

} else {

  // do alternative processing or error logging, perhaps including throw

}
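One caveat with parseInt is that it accepts a numeric prefix: parseInt('12abc', 10) returns 12 rather than NaN. If that matters for your flow, a stricter check is one option. The helper below is a sketch with a hypothetical name, toStrictInteger; in the processor you would call it as toStrictInteger('${myAttribute}'), while the sample strings here stand in for possible attribute values.

```javascript
// Hypothetical helper: returns an integer, or null when the text is not a whole number.
function toStrictInteger(text) {
  const trimmed = text.trim();
  // Accept only an optional sign followed by one or more digits.
  if (!/^[+-]?\d+$/.test(trimmed)) {
    return null;
  }
  return parseInt(trimmed, 10);
}

// Sample strings standing in for what the attribute might contain.
const samples = ['42', '', '12abc', '-7'];
const parsed = samples.map(toStrictInteger);

console.log(parsed); // [ 42, null, null, -7 ]
```

Returning null keeps the "no usable number" case distinct from a legitimate zero, so the calling code can branch on it the same way the isNaN check does above.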

String Interpolation

Another fun little gotcha: NiFi attributes aren’t the only place this syntax gets used. JavaScript uses the same ${} notation for string interpolation inside template literals (strings delimited by backticks):

const myValue = 5;

const count = `1, 2, ... ${myValue}! (Three, sir!)`;

At the end of this code, count will have a string value of “1, 2, ... 5! (Three, sir!)”. However, if you run this code in ExecuteScriptMarkLogic, NiFi gets to it first. When it sees ${myValue}, it does a direct replacement with the value of the myValue flowfile attribute. Since this happens before JavaScript evaluates the code, JavaScript never gets the chance to do the string interpolation. The resulting string depends on the value of that flowfile attribute, which may not even exist.

Happily, there’s a simple workaround: don’t use string interpolation in this context.

const myValue = 5;

const count = '1, 2, ... ' + myValue + '! (Three, sir!)';

This way NiFi never sees the ${} notation and you get the expected result.
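The ordering problem can be reproduced outside NiFi with a small simulation. As a sketch, substituteAttributes below is a hypothetical stand-in for NiFi's replacement pass (not its real implementation), and new Function plays the role of the JavaScript engine that runs the script afterwards. The attribute value '3' is made up for the example.

```javascript
// Hypothetical stand-in for NiFi's substitution pass (an assumption for illustration).
function substituteAttributes(scriptText, attributes) {
  return scriptText.replace(/\$\{(\w+)\}/g, (match, name) =>
    Object.prototype.hasOwnProperty.call(attributes, name)
      ? String(attributes[name])
      : ''
  );
}

// Run the substitution first, then evaluate the resulting script text.
function runAfterSubstitution(scriptText, attributes) {
  return new Function(substituteAttributes(scriptText, attributes))();
}

// Script bodies held as plain strings so that ${myValue} stays literal here.
const templateScript =
  'const myValue = 5; return `1, 2, ... ${myValue}! (Three, sir!)`;';
const concatScript =
  "const myValue = 5; return '1, 2, ... ' + myValue + '! (Three, sir!)';";

const templateWithAttr = runAfterSubstitution(templateScript, { myValue: '3' });
const templateNoAttr = runAfterSubstitution(templateScript, {});
const concatWithAttr = runAfterSubstitution(concatScript, { myValue: '3' });

console.log(templateWithAttr); // 1, 2, ... 3! (Three, sir!)
console.log(templateNoAttr);   // 1, 2, ... ! (Three, sir!)
console.log(concatWithAttr);   // 1, 2, ... 5! (Three, sir!)
```

In the template literal version, the JavaScript variable myValue is never consulted; only the concatenation version produces the value the script author intended.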

Conclusion

Using NiFi flowfile attribute values to drive processing in MarkLogic is a great example of NiFi’s data orchestration. As developers, we need to be conscious of exactly what we’re asking the software to do. Hopefully these tips help you avoid some subtle errors and get you one step closer to production!

By Dave Cassel
